Get The Memo.

2/Mar/2024

Video: https://youtu.be/BQ8ykNyZ6LY

Date: 2/Mar/2024

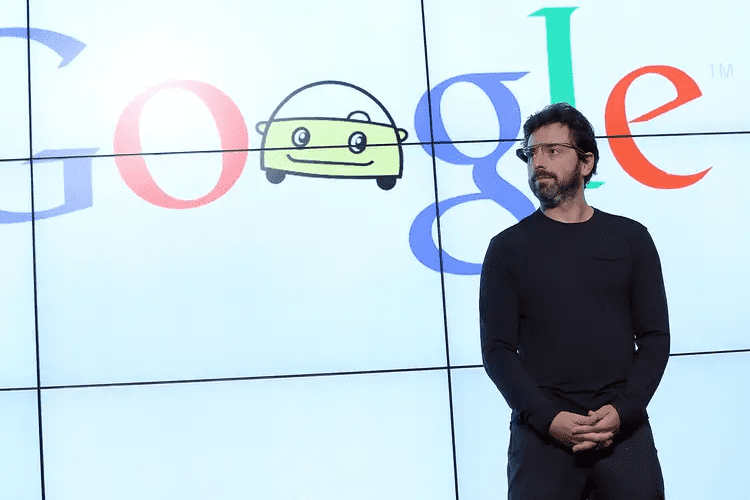

Title: Sergey Brin on Gemini 1.5 Pro (AGI House; March 2, 2024)

Transcribed by: OpenAI Whisper via MacWhisper

Edited by: Unedited, but some formatting by Claude 2.1 on Poe.com. Prompt: Split the content of this transcript up into paragraphs with logical breaks. Add newlines between each paragraph. Improve spelling and grammar. The speaker is Sergey: and add Question: whenever an audience member poses a question.

Full transcript (unedited, some formatting by Claude 2.1)

Sergey: It’s very exciting times. This model that, I think we’re playing with 1.5 Pro. Yeah, 1.5 Pro. We internally call it Goldfish. It’s sort of a little secret. I don’t actually, oh, I know why. It’s because Goldfish have very short memories. It’s kind of an ironic thing. But, when we were training this model, we didn’t expect it to come out nearly as powerful as it did or have all the capabilities that it does. In fact, it was just part of a scaling ladder experiment. But, when we saw what it could do, we thought, hey, we don’t want to wait. We want the world to try it out. And I’m grateful that all of you here are here to give it a go. What else did I say? Let me introduce somebody else. What’s happening next? I think a few of them probably have a lot of questions. Oh, good. Okay, quick questions. I’m probably going to have to defer to technical experts on those things. But, fire away. Any questions?

Question: So, what are your reflections on the Gemini art happening?

Sergey: The Gemini art? Yeah, with the… Okay. That wasn’t really expected. But, you know, we definitely messed up on the image generation. And I think it was mostly due to just not thorough testing. And it definitely, for good reasons, upset a lot of people on the images you might have seen. I think the images prompted a lot of people to really deeply test the base text models. And the text models have two separate effects. One thing that’s quite honestly, if you deeply test any text model out there, whether it’s ours, ChatGPT, Grok, what have you, it’ll say some pretty weird things that are out there that definitely feel far left, for example. And kind of any model, if you try hard enough, can be prompted into that regime. But also, just to be fair, there’s definitely work in that model. So, once again, we haven’t fully understood why it leans left in many cases. And that’s not our intention. If you try it, starting over this last week, it should be at least 80% better on the test cases that we’ve covered. So, I invite all of you to try it. This should be a big effect. The model that you’re trying, the Gemini 1.5 Pro, which isn’t in the sort of public-facing app, the thing we used to call “Bard”, shouldn’t have much of that effect, except for that general effect that if you sort of red-team any AI model, you’re going to get weird corner cases. But we’re not, even though this one hasn’t been thoroughly tested that way, we don’t expect it to have strong particular leanings. I suppose we can give it a go. But we’re more excited today to try out the long context and some of the technical features.

Question: With all the recent developments in multimodalities, have you considered a video ChatGPT?

Sergey: A video ChatGPT? We probably wouldn’t call it that. But, no, I mean, multimodal, both in and out, is very exciting. Video, audio, we run early experiments, and it’s an exciting field. Even the little, you guys remember the duck video that kind of got us in trouble? To be fair, it was fully disclosed in the video, but it wasn’t real time. But that is something that we’ve actually done, is fed in images frame by frame and had it talk about them. So, yeah, that’s super exciting. I don’t think we have anything like real time to present right now, today.

Question: Are you personally writing code for some projects?

Sergey: I’m not actually writing code, to be perfectly honest. It’s not code that you would be very impressed by. But, yeah, every once in a while, it’s all kind of debugging or trying to understand for myself how a model works, or to just analyze the performance in a slightly different way or something like that. But little bits and pieces that make me feel connected, it’s, once again, I don’t think you would be very technically impressed by it. But it’s nice to be able to play that. And sometimes I’ll use the AI bots to write code for me, because I’m rusty and they actually do a pretty good job. So, I’m very pleased by that. Okay, question in the back.

Question: Yes, I have a question. Juan, click around first. Okay, so pre-AI simulation, pre-AI, the closest thing we got to simulators was game engines. What do you think the new advances in the field mean for us to create better games or game engines in general? Do you have a view on that?

Sergey: Sorry, I was like a sign of just approval or anything. I’m AI. What can I say about game engines? I think obviously, on the graphics, you can do new and interesting things with game engines. But I think maybe the more interesting is the interaction with the other virtual players and things like that. Like whatever the characters are. I guess these days you can call people who are brand NPCs or whatever. But in the future, maybe NPCs will be actually very colorful and interesting. So, I think that’s a really rich possibility. Probably not enough of a gamer to think through all the possible futures with AI. And it opens up many possibilities.

Question: What kind of applications are you most excited about for people building on Gemini?

Sergey: Yeah, what kind of applications am I most excited about? I think just ingesting right now, for the version we’re trying to edit, the 1.5 Pro, the long context is something we’re really experimenting with. And whether you dump a ton of code in there or video, I mean, I’ve just seen people do… I didn’t think a model could do this, to be perfectly honest. People dump in their code and do a video of the app and say, “Here’s the bug,” and the model will figure out where the bug is in the code. Which is kind of mind-blowing, that that works at all. I honestly don’t really understand how a model does that. But, yeah, experimenting with things that really require the long context. Do we have the servers to support all these people here banging on it? We have people on the servers here as well. Okay. If my phone is buzzing, everybody’s really stressed out. It’s a customer too, right? Because the million context queries do take a bit of compute and time. But you should go for it. Yeah.

Question: You mentioned a few times that you’re not sure how this model works, or you weren’t sure that this could do the things that it does. Do you think we can reach a point where we actually understand how these models work, or will they remain black boxes and we just trust the makers of the model to not mess up?

Sergey: No, I think you can learn to understand it. I mean, the fact is that when you train these things, there are a thousand different capabilities you could try out. So on the one hand, it’s very surprising that it could do it. On the other hand, if it’s any particular one capability, you could go back. We can look at where the attention is going at each layer, like the code and the video. We can deeply analyse it. Personally, I don’t know how far along the researchers have gotten to doing that kind of thing, but it takes a huge amount of time and study to really slice apart why a model is able to do some things. Honestly, most of the time that I see slicing, it’s like why it’s not doing something. So I guess I’d say it’s mostly because I think we could understand it, and people probably are, but most of the effort is spent figuring out where it goes wrong, not where it goes right.

Question: In computer science, there’s this concept of reflective programming. A program can look at its own source code, modify its own source code, and in AGI literature, there’s recursive self-improvements. So what are your thoughts on the implications of extremely long context windows in a language model being able to modify its own prompts, and what that has to do with autonomy and building towards AGI potentially?

Sergey: I think it’s very exciting to have these things actually improve themselves. I remember when I was, I think in grad school I wrote this game where it was like the wall maze you were flying through, but you shot the walls, the walls corresponded to bits of memory, and it would just flip those bits, and the goal was to crash it as quickly as possible. Which doesn’t really answer your question, but that was an example of self-modifying code, I guess not for a particularly useful purpose, but I’d have people play that until the computer crashed. Anyhow, on your positive example, I see today people just using a talk about them. I think open loop could work for certain, I think for certain very limited domains today, like if you, without the human intervention to guide it, I bet it could actually do some kind of continued improvement. But I don’t think we’re quite at the stage where for, I don’t know, real serious things. First of all, the context is not actually enough for big code bases, to turn on the entire code base. But you could do retrieval and then augmentation editing. I guess I haven’t personally played with enough, but I haven’t seen it be at the stage today where a complex piece of code will just iteratively improve itself. But it’s a great tool, and like I said, with human assistance, we for sure do, I mean, I would use Gemini to try to do something with a Gemini code, even today, but not very open-loop deep sophisticated things, I guess.

Question: So I’m curious, what’s your take on Sam Altman’s decision, or plan at least, to raise seven trillion dollars? [Laughter] I’m just curious, how do you see that from obvious years of work?

Sergey: I saw the headline, I didn’t get too deep into it, I assumed it was a provocative statement or something. I don’t know, I guess it has to be for some trillion dollars. [Laughter] I think it was meant for chip development or something like that. I’m not an expert in chip development, but I don’t get the sense that you can sort of pour money, like even huge amounts of money, in, and out come chips. I’m not an expert in the market at all.

Sergey: Let me try somebody in the back. Okay, yes.

Question: So the training cost of rock, paper, mortar is super high, so how do you envision that?

Sergey: Oh, the training costs of models are super high. Yeah, the training costs are definitely high, and that’s something companies like us have to cope with. But I think the long term utility is incomparably higher, like if you measure it on a human productivity level, if it saves somebody an hour of work over the course of a week, that hour is worth a lot. There are a lot of people using these things, or will be using them, but it’s a big bet on the future. It costs less than seven trillion dollars, right? [Laughter]

Question: Model training on device?

Sergey: Yeah, model running on device, we’ve shipped it to Android, Chrome, and Pixel phones, I think even Chrome runs a pretty decent model these days. We just open sourced Gemma, which is a pretty small, I can’t remember what it is. Yeah, I mean it’s really useful, it can be low latency, not dependent on connectivity, and the small models can call bigger models in the cloud too, so I think on-device is a really good idea.

Question: What are some verticals or industries that you feel like this gen AI wave is going to have a big impact on, and that startups should consider hacking on?

Sergey: Oh, which industries do I think have that big opportunity? I think it’s just very hard to predict, and there’s sort of the obvious industries that people think of, so the customer service, or kind of just like, you know, analyzing, I don’t know, like different lengthy documents, and kind of the workflow automation I guess, those are obvious, but I think there are going to be non-obvious ones, which I can’t predict, especially as you look at these sort of multi-modal models, and the surprising capabilities that they have, and I feel like, I mean that’s why we have all of you here, you guys are the creative ones to figure that out. Okay, you start there.

Sergey: I’m actually not on top of a price tag claim, I don’t expect that we will raise prices, because, I mean there are fundamentally a couple of trends, one is just that these, you know, there’s just optimizations and things around inference, that are just constantly like all the time, this 10% idea, this 20% idea, and like month after month that adds up. I think our TPUs are actually pretty damn good at inferencing, not dissing the GPUs, but for certain inference workloads, and that’s figured really nicely. And the other big effect is actually we’re able to make smaller models more and more effective, just with new generations, just whatever architectural changes, training changes, all kinds of things like that. So the models are getting more powerful, even at the same size. So I would not expect prices to go up.

Question: AI, healthcare, and biotech.

Sergey: Well I think there are a couple very different ways, on the biotech side, people look at things like AlphaFold, and things like that, just like understanding the fundamental mechanics of life, and I think you’ll see AI do more and more of that, whether it’s actual physical, molecule, and bonding things, or reading and summarizing journal articles, things like that. I also think for patients, and this is kind of a tough area, honestly, because we’re definitely not prepared for our, just, AI is like, go ahead, ask any question, like we’re not, you know, AI is making mistakes and things like that. There is a future where you, if you can overcome those kinds of issues, where an AI can much more deeply spend time on an individual person, and their history, and all their scans, maybe mediated by a doctor or something, but actually give you just better diagnoses, better recommendations, things like that.

Question: And are you focusing on any other non-transformer architectures, or like reasoning, planning, or any of, to get better at their…

Sergey: Am I focusing on any non-transformer architectures? I mean, I think there’s like so many sort of variations, but I guess most people would argue are still kind of transformer based. I mean, I’m sure some of you, a couple of you, if you want to speak more to it, would be looking, but, yeah, as much progress as transformers have made over the last six, seven, eight years, I guess, there’s, you know, there’s nothing to say there’s not going to be some new revolutionary architecture, and it’s also possible that just, you know, incremental changes, for example, sparsity and things like that, that are still kind of the same transformer, but also by roach and stuff. I don’t know, I don’t have a magic answer.

Question: Is there some bottleneck for like reasoning kind of questions? Using a bottleneck for transformers?

Sergey: I mean, there’s been lots of theoretical work showing the limitations of transformers, you know, you can’t do this kind of thing, this many layers and things like that. I don’t know how to extrapolate that to like, contemporary transformers that usually don’t meet the assumptions of those theoretical works, so it may not apply, but, I’d probably hedge my bets and try other architectures, all else being equal. Okay, thank you. I’ll do what you want me to do. Thanks, yeah. I’m glad, I really wanted to do that. Yeah, thanks.

Question: So did you see Gemini expanding capabilities into like 3D head down the line of space? Or computing in general, or a simulation of the world in general of that? And especially beyond Google Glass, you already have some product in the area, like Google Maps, Street View, AR, or all of that, do you see all of those have some synergies between them?

Sergey: Well, it’s a good question. To be honest, I haven’t thought about it, but now that you say it, there’s no reason we couldn’t. You know, could it more sort of, like, it’s kind of another mode, like 3D data. So, probably something interesting would happen. I mean, I don’t see why you wouldn’t try to put that into a model that’s already got all the smart supertext model, and now could turn on something else too. And by the way, maybe somebody’s doing it in Java, and I’ll stop and explain it to you. Because I’m not doing it in a way that I forgot about. It doesn’t mean it’s not happening. They asked a question about this.

Question: How optimistic are you that we will be able to rein in text-generating models’ tendency to hallucinate, and what do you think about the ethical issue of potentially spreading misinformation?

Sergey: Big problem right now. No question about it. I mean, we have made them hallucinate less and less over time. But I would definitely be excited to see a breakthrough that takes us to near zero. I don’t know, that’s not, you can’t just count on breakthroughs. So I think we’re going to keep going with the incremental kinds of things that we do to just bring the hallucinations down, down, down over time. Like I said, I think a breakthrough would be good. Misinformation, you know, misinformation is a complicated issue, I think. I mean, obviously you don’t want your AI bots to be just making stuff up, but they can also be kind of tricked into, there’s a lot of, I guess, complicated political issues in terms of what people consider, what different people consider misinformation versus not. And it gets into kind of a broad social debate. I suppose none of them could consider us about them sort of deliberately generating misinformation on behalf of another actor. From that point of view, I mean, unfortunately it’s like, it’s very easy to make a lousy AI, like one that hallucinates a lot. And you can take, you know, any open source text model and probably tweak it to generate misinformation of all kinds and if you’re not concerned about the accuracy, it’s just kind of an easy thing to do. So I guess now I think about it, I mean detecting AI generated content is an important field and something that we work on and so forth, so you can at least maybe tell if something coming at you was AI generated. Yeah.

Question: Hi Alexandra. So the CEO of Nvidia said that basically the future of writing code as a career is dead. What is the take-home point? What do you think about potentially a second career?

Sergey: Okay, yeah. I thought that was going to say that. I mean that’s, like we don’t know where the future of AI is going broadly. I would, you know, we don’t know, you know, it seems to help across a range of many careers, whether it’s graphic artists or customer support or doctors or executives or what have you. I mean, so I don’t know that I would be like singling out programming in particular. It’s actually probably one of the more challenging tasks for an LLM today. But if you’re talking about for decades in the future, what should you be kind of preparing for and so forth? I mean, it’s hard to say, and the AI quite good at programming, but you can say that about kind of any field of whatever. So I guess I probably wouldn’t have singled that out as like saying don’t study specifically programming. I don’t know if that’s an answer. Okay, in the back.

Question: If a lot of people start using these agents to write code, I’m wondering how that’s going to impact IT security. You could argue that like the code might become worse or like less checked for certain issues, or you could argue that like we get better at writing test suites which cover all the cases. What are your opinions on this? Like is maybe for the average programmer like IT security the way to go or is it like the code got to be written but someone needs to check it for the user?

Sergey: Oh wow, you guys are all trying to choose careers, basically. I think you should use a fortune teller for that general line of questions. But I do think that using an AI today to write let’s say unit tests is pretty straightforward. Like that’s the kind of thing the AI does really quite well. So I guess my hope is that AI will make code more secure, not less secure. Insecurity is usually, to some extent, the effect of people being lazy, and one of the things that AI is kind of good at is not being lazy. So if I had to bet, I would say there’s probably a net benefit to security with AI. But I wouldn’t discourage you from pursuing a career in IT security based on that. I’ll get a question in the back.

Question: Do you want to build AGI?

Sergey: Do I want to? Yeah? Yeah. I mean I think it’s, yeah different people mean different things about that. But to me the reasoning aspects are really exciting and amazing. And you know I kind of came out of retirement just because of the trajectory of AI. It was so exciting. And as computer scientists just seeing what these models can do year after year is astonishing. So yes.

Question: Any efforts on like humanoid robotics or these because there was so much progress in Google X like in 2015-16?

Sergey: Oh humanoid robotics. Boy we’ve done a lot of humanoid robotics over the years and sort of acquired or sold a bunch of companies with humanoid robotics. And now there are a zillion companies, sorry, not zillion, there are quite a few companies doing humanoid robotics. And internally we still have groups that work on robotics in varying forms. So what are my thoughts about that? You know in general I worked on X prior to this sort of new AI wave. There the focus was more hardware projects for sure. But obviously I guess I found the hardware independent edit. Hardware is much more difficult kind of on a technical basis, on a business basis in every way. So I’m not discouraging people from doing it. We need people for sure to do it. At the same time while the software and the AIs are getting so much faster at such a high rate, I guess to me that feels like that’s kind of the rocket ship. And I feel like if I get distracted in a way by making hardware for today’s AIs, that might not be the best use of time compared to what is the next kind of level of AI I could be able to support. For that matter it will design a robot for me. That’s my first hope. There are a bunch of people at Google and Alphabet who can work on hardware. Yes.

Question: So continuing and evaluating web news is really wonderful. What’s your take on how everything and what you just talked about is very much in the context of the way people do it?

Sergey: The question about advertising. Yeah, I, of all people, am not too terribly concerned about business model shifts. I think it’s a little bit… I think it’s wonderful that we’ve been now for 25 years or whatever able to give just world-class information search for free to everyone. And that’s supported by advertising, which in my mind is great. It’s great for the world. You know, a kid in Africa has just as much access to basic information as the President of the United States or what have you. So that’s good. At the same time, I expect business models are going to evolve over time. And maybe it will still be advertising because whatever the advertising kind of works better. The AI is able to tailor it better. I like it. But even if it happens to move to, you know, now we have Gemini Advanced, other companies have their paid models. I think the fundamental issue is that you’re delivering a huge amount of value. You know, displacing all the mental effort that would have been required to take the place of that AI, whether in your time or labor or what have you, is enormous. And the same thing was true in search. So I personally feel as long as there’s huge value being generated, we’ll figure out the business models. Yeah.

Question: On, I think, the model of, what was your other topic, but a long ago Google had this Google Glass, but now Apple has Vision Pro. I think Google Glass may be a little bit early, would you consider like trying to give that another shot?

Sergey: I feel like I have some Google Glass. No, no, but I feel like I made some bad decisions. Yeah, it was for sure early, and early in two senses of the word. Maybe early in the overall evolution of technology, but also, I think I, like, in hindsight I tried to push it as a product, but it was sort of more of a prototype, and I should have set those expectations around it, and I just didn’t know much about consumer hardware supply chains back then, and a bunch of things I wish I’d done differently. But I personally am still a fan of kind of the lightweight, kind of minimal display that that offered, like the QWER-LA versus the heavy things that we have today. That’s my personal preference, but the Apple Vision and the Oculus, for that matter, they’re very impressive, like having played with them, and I’m just impressed by what you can have in front of your screen. That was what I was personally going for, kind of back then.

Question: Where do you see Google search going?

Sergey: Where do I see Google search going? Well, it’s a super exciting time for search because your ability to answer questions through AI is just so much greater. I think the bigger opportunity is in situations where you are recall limited. Like, you might ask a very specialized question or it’s related to your own personal situation in a way that nobody out there on the internet has already written about. And for the questions that a million people have written about already and thought deeply about, it’s probably not as big a deal. But the things that are very specific to what you might care about right now in a particular way, that’s a huge opportunity. And you can imagine all kinds of products and UIs and different ways to deliver that. But basically AI is the enabler, just doing a much better job in that case. Okay, last question.

Question: I’ll probably start this opportunity by saying that you are a company that is very unique. So how can AI be something towards the top? Mortality. Mortality.

Sergey: Mortality? Oh. Okay. I’m probably not as well versed as all of you are, to be honest. But I’ve definitely seen the molecular AI make huge amounts of progress. You could imagine that there would also be a lot of progress that I haven’t seen yet on the epidemiology side of things, to just be able to get kind of a more honest, better controlled, broader understanding of what’s happening to people’s health around the world. But yeah, I’ll give you a good answer on the last one. I don’t have a brilliant immortality key just by AI, just like that. But it’s the kind of field that for sure benefits from AI, whether you’re a researcher or like, I want it to just summarize articles for me, just step one. But in the future, I would expect the AI would actually give you novel hypotheses to test. It does that today with the AlphaFolds of the world, but maybe in more complex systems than just molecules. Okay. Thank you.


Dr Alan D. Thompson is an AI expert and consultant, advising Fortune 500s and governments on post-2020 large language models. His work on artificial intelligence has been featured at NYU, with Microsoft AI and Google AI teams, at the University of Oxford’s 2021 debate on AI Ethics, and in the Leta AI (GPT-3) experiments viewed more than 4.5 million times. A contributor to the fields of human intelligence and peak performance, he has held positions as chairman for Mensa International, consultant to GE and Warner Bros, and memberships with the IEEE and IET.

This page last updated: 4/Mar/2024. https://lifearchitect.ai/sergey/
